In this work, we introduce Unique3D, a novel image-to-3D framework for efficiently generating high-quality 3D meshes from single-view images, featuring state-of-the-art generation fidelity and strong generalizability. Previous methods based on Score Distillation Sampling (SDS) can produce diverse 3D results by distilling 3D knowledge from large 2D diffusion models, but they usually suffer from long per-case optimization times and 3D inconsistency. Recent works address these problems and generate better 3D results either by fine-tuning a multi-view diffusion model or by training a fast feed-forward model. However, they still lack intricate textures and complex geometries because of multi-view inconsistency and limited output resolution. To simultaneously achieve high fidelity, consistency, and efficiency in single image-to-3D, we propose Unique3D, a framework that includes a multi-view diffusion model with a corresponding normal diffusion model to generate multi-view images together with their normal maps, a multi-level upscale process to progressively improve the resolution of the generated orthographic multi-views, and an instant and consistent mesh reconstruction algorithm called ISOMER, which fully integrates the color and geometric priors into the resulting meshes. Extensive experiments demonstrate that Unique3D significantly outperforms other image-to-3D baselines in terms of geometric and textural details.
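The framework works with four orthographic views of the object. As a minimal sketch (not Unique3D's code), assuming front, right, back, and left cameras at azimuths of 0°, 90°, 180°, and 270° about the vertical y axis, orthographic projection amounts to rotating the object into each camera frame and dropping the depth coordinate. The names `AZIMUTHS_DEG`, `rotation_y`, and `orthographic_project` are hypothetical.

```python
import numpy as np

# Assumption: four cameras orbiting the vertical (y) axis, i.e. front, right,
# back, left. The front camera sits on +z looking toward -z with y up.
AZIMUTHS_DEG = (0.0, 90.0, 180.0, 270.0)


def rotation_y(deg: float) -> np.ndarray:
    """Right-handed rotation matrix about the y axis by `deg` degrees."""
    t = np.deg2rad(deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])


def orthographic_project(vertices: np.ndarray, azimuth_deg: float,
                         scale: float = 1.0) -> np.ndarray:
    """Project (N, 3) world-space vertices to (N, 2) image-plane coordinates.

    A camera at azimuth `a` is the front camera rotated by `a` about y, so
    world points are moved into its frame by rotating them by `-a`.
    Orthographic projection then simply discards the depth (z) coordinate:
    there is no perspective divide.
    """
    cam = vertices @ rotation_y(-azimuth_deg).T
    return scale * cam[:, :2]


verts = np.array([[1.0, 0.0, 0.0],
                  [0.0, 0.5, 1.0]])
views = {a: orthographic_project(verts, a) for a in AZIMUTHS_DEG}
```

Because there is no perspective divide, an object's projected size does not depend on its distance from the camera, which is one reason orthographic views are convenient when several views of the same object must line up.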

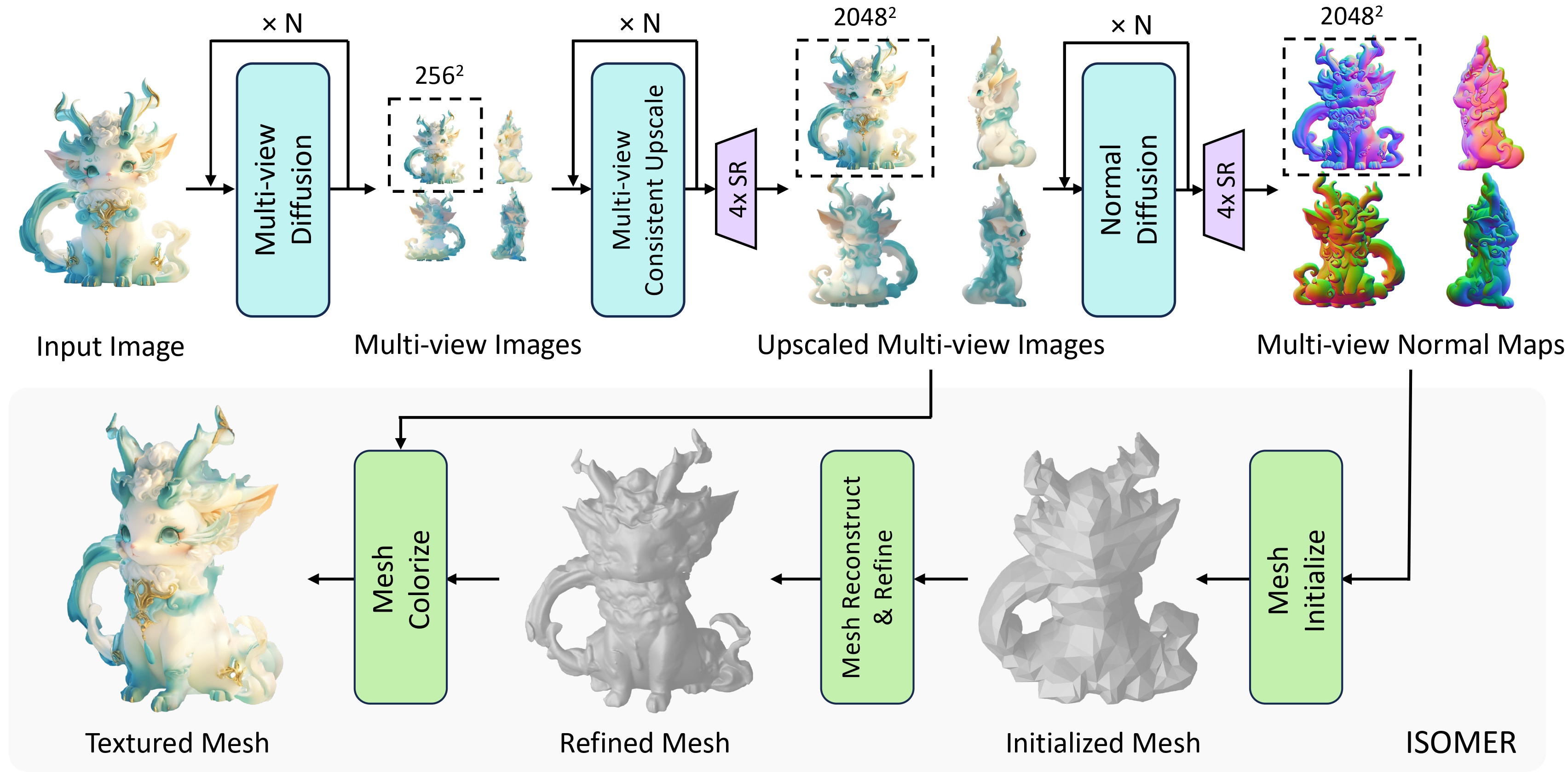

Pipeline of our Unique3D. Given a single in-the-wild image as input, we first generate four orthographic multi-view images with a multi-view diffusion model. Then, we progressively improve the resolution of the generated multi-views through a multi-level upscale process. Given the generated color images, we train a normal diffusion model to generate the corresponding normal maps and use a similar strategy to lift them to high resolution. Finally, we reconstruct high-quality 3D meshes from the high-resolution color images and normal maps with ISOMER, our instant and consistent mesh reconstruction algorithm: a robust multi-view reconstruction method that directly deforms the mesh and can efficiently reconstruct mesh models with millions of faces.
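Since the normal diffusion model outputs normal maps as images, each unit normal has to be stored as a color. The exact format and coordinate frame Unique3D uses are not stated here; this sketch assumes the common convention that maps each component from [-1, 1] to [0, 255] via (n + 1) / 2. The helpers `encode_normals` and `decode_normals` are hypothetical.

```python
import numpy as np


def _normalize(v: np.ndarray) -> np.ndarray:
    """Scale vectors along the last axis to unit length (guarding against zero)."""
    norm = np.linalg.norm(v, axis=-1, keepdims=True)
    return v / np.clip(norm, 1e-8, None)


def encode_normals(normals: np.ndarray) -> np.ndarray:
    """Map normals of shape (..., 3) in [-1, 1] to uint8 RGB in [0, 255].

    Assumed convention: rgb = (n + 1) / 2, quantized to 8 bits.
    """
    n = _normalize(normals)
    return np.round((n * 0.5 + 0.5) * 255.0).astype(np.uint8)


def decode_normals(rgb: np.ndarray) -> np.ndarray:
    """Invert `encode_normals` and renormalize to undo quantization drift."""
    n = rgb.astype(np.float64) / 255.0 * 2.0 - 1.0
    return _normalize(n)


rng = np.random.default_rng(0)
normals = _normalize(rng.normal(size=(4, 4, 3)))  # a tiny 4x4 "normal map"
image = encode_normals(normals)
recovered = decode_normals(image)
```

Renormalizing after decoding matters because 8-bit quantization leaves vectors slightly off unit length, while a reconstruction step that consumes these maps would typically expect unit normals.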

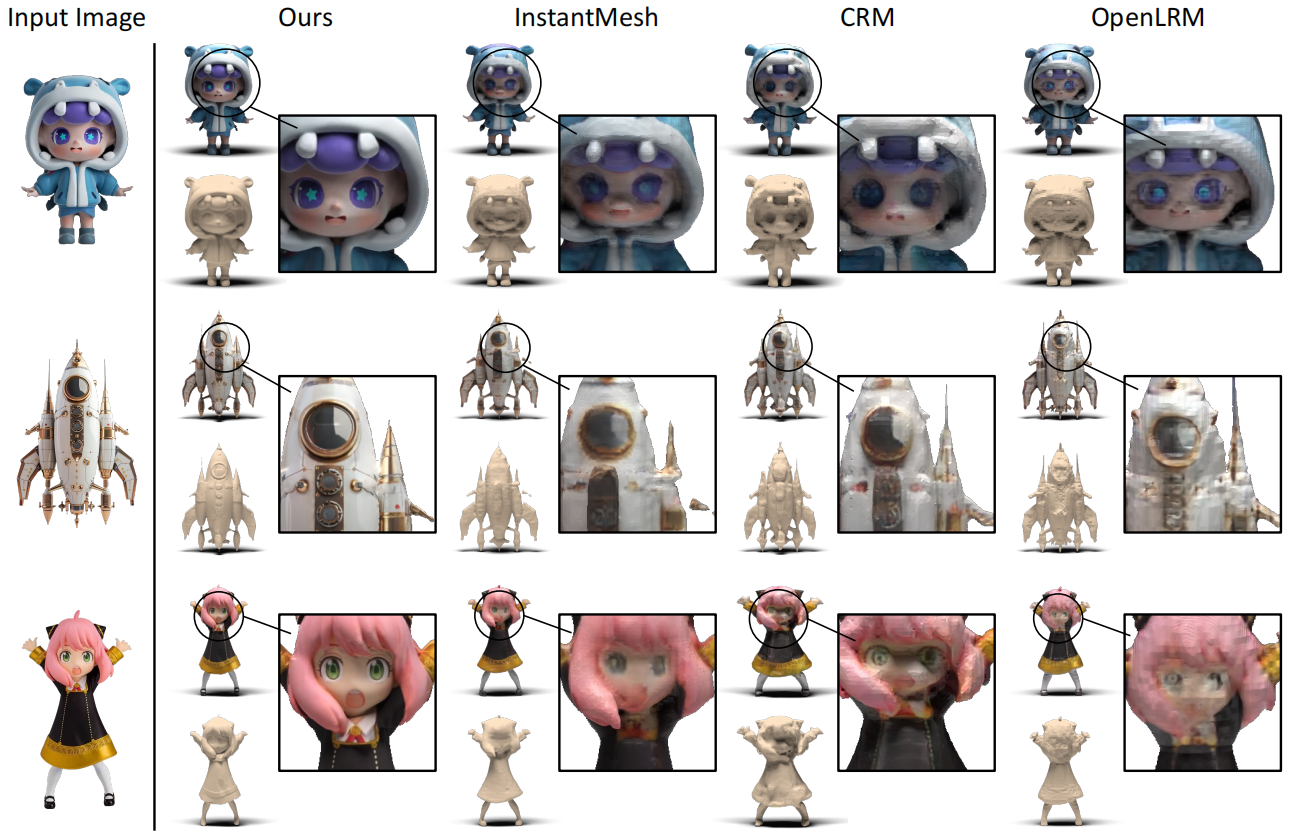

Comparison. We compare our model with InstantMesh, CRM, and OpenLRM. Our model generates accurate geometry and detailed textures.